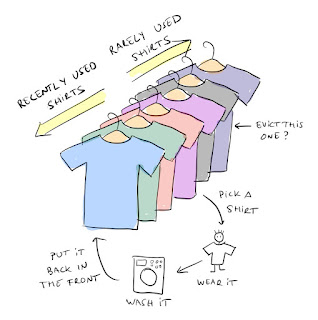

To implement an LRU Cache data structure to store and retrieve data in constant time i.e., O(1). However, We'll have to look for an existing data structure that can perform both store and retrieval processes in almost constant time. HashMap or Hashing generally can help here to efficiently store and retrieve them but with a caveat of not storing them in the least recently used order.

Before jumping into the code snippet, The general idea behind this implementation will require DoublyLinkedList and HashMap internally to make this magic happen.

1. DoublyLinkedList is capable of managing the usage order in almost constant time.

2. HashMap is capable of serving the get and putting a request in almost constant time.

Why not merge them and form an LRU data structure.

class LRUCache {

class DoublyLinkedListNode {

int key;

int value;

DoublyLinkedListNode previous;

DoublyLinkedListNode next;

}

private void addNode(DoublyLinkedListNode node) {

node.previous = head;

node.next = head.next;

head.next.previous = node;

head.next = node;

}

private void removeNode(DoublyLinkedListNode node) {

DoublyLinkedListNode previousNode = node.previous;

DoublyLinkedListNode nextNode = node.next;

previousNode.next = nextNode;

nextNode.previous = previousNode;

}

private void moveToHead(DoublyLinkedListNode node) {

removeNode(node);

addNode(node);

}

private DoublyLinkedListNode popTail() {

DoublyLinkedListNode node = tail.previous;

removeNode(node);

return node;

}

private Map<Integer, DoublyLinkedListNode> cache = new HashMap<>();

private DoublyLinkedListNode head, tail;

private int capacity;

private int size;

public LRUCache(int capacity) {

this.size = 0;

this.capacity = capacity;

head = new DoublyLinkedListNode();

tail = new DoublyLinkedListNode();

head.next = tail;

tail.previous = head;

}

public int get(int key) {

DoublyLinkedListNode cacheNode = cache.get(key);

if(cacheNode == null) return -1;

moveToHead(cacheNode);

return cacheNode.value;

}

public void put(int key, int value) {

DoublyLinkedListNode cacheNode = cache.get(key);

if(cacheNode == null) {

DoublyLinkedListNode newNode = new DoublyLinkedListNode();

newNode.value = value;

newNode.key = key;

addNode(newNode);

cache.put(key, newNode);

++size;

if(size > capacity) {

DoublyLinkedListNode lruNode = popTail();

cache.remove(lruNode.key);

--size;

}

} else {

cacheNode.value = value;

moveToHead(cacheNode);

}

}

}

/**

* Your LRUCache object will be instantiated and called as such:

* LRUCache obj = new LRUCache(capacity);

* int param_1 = obj.get(key);

* obj.put(key,value);

*/